[1] What makes DeepSeek so special?

Mayank Pratap Singh


![[1] What makes DeepSeek so special?](/_next/image?url=%2Fimages%2Fblog%2F1DS.png&w=1920&q=75)

DeepSeek has developed a series of LLMs with major optimizations in efficiency and reasoning capabilities.

Let's first look at the different LLMs built by DeepSeek.

You can find them on DeepSeek's website, deepseek.com: scroll down to the footer to see the various models.

The main model that caught attention is DeepSeek-R1.

- DeepSeek LLM (V1) – Focused on math and coding.

- DeepSeek-V2 – Optimized for coding.

- DeepSeek-V3 (671B parameters) – A large-scale model.

- DeepSeek-R1 – A reasoning model.

DeepSeek-R1 offers performance comparable to OpenAI's top models at a fraction of the cost while being open-source.

DeepSeek = Fraction of the cost + Open Source

DeepSeek-V3 has 671 billion parameters, and DeepSeek-R1 is derived from DeepSeek-V3.

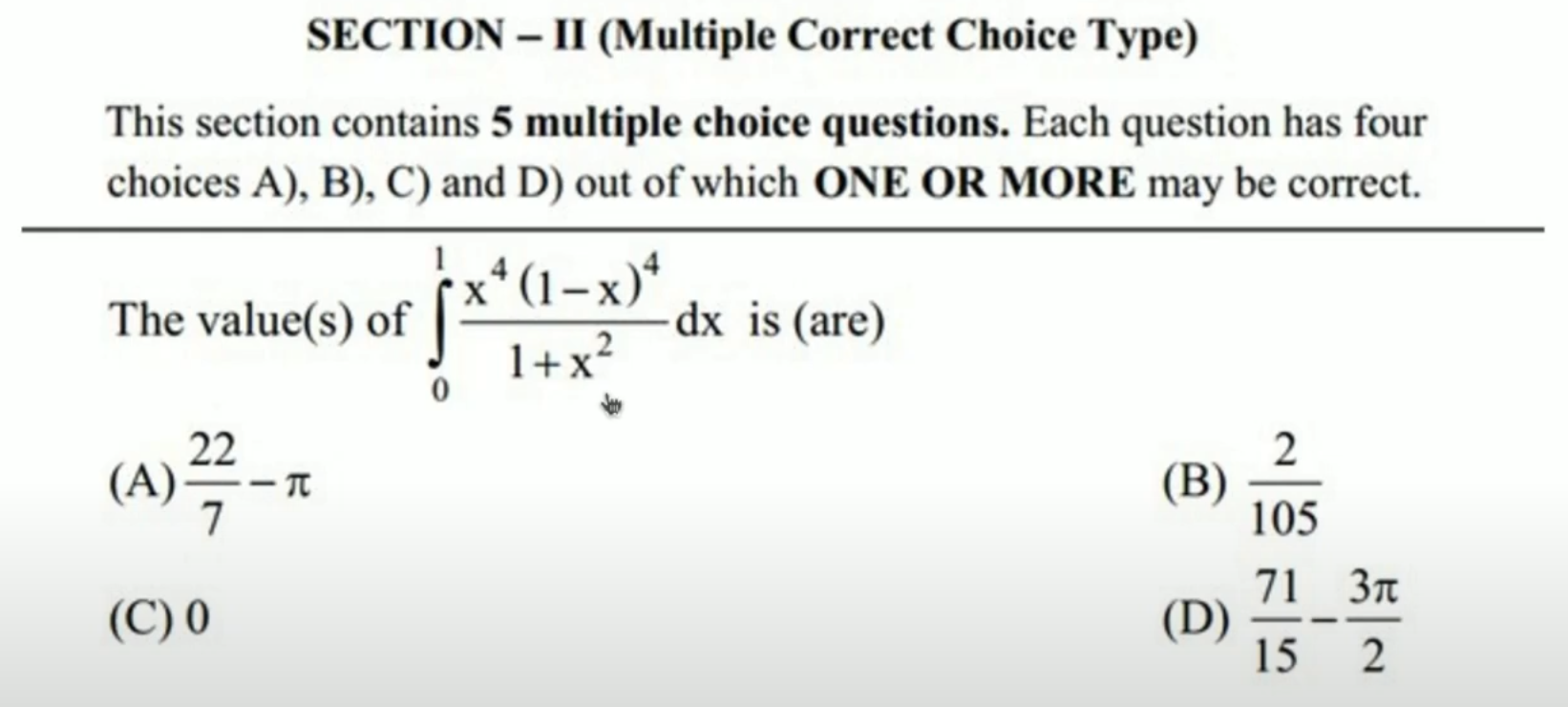

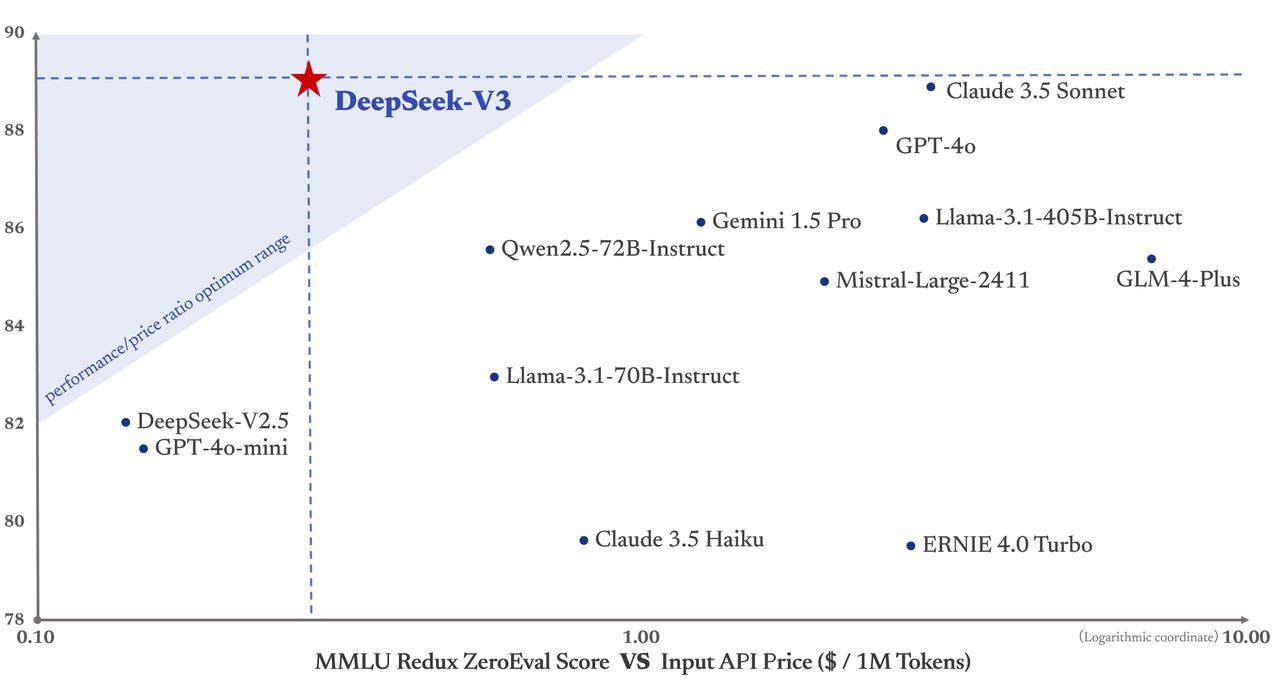

OpenAI vs DeepSeek (Math Puzzle Test)

Let's compare DeepSeek with an OpenAI model on a math problem involving integrals.

We have the following integral question:

The interesting aspect of this problem is its answer: 22/7 - π.

Reference: Wikipedia, "Proof that 22/7 exceeds π"
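As a quick sanity check, here is a small sketch that evaluates the integral numerically. It assumes the question is the one from the Wikipedia article above, the integral of x^4 (1 - x)^4 / (1 + x^2) from 0 to 1. The `simpson` helper is written here for illustration and is not from any library.

```python
import math


def integrand(x: float) -> float:
    # Integrand from the Wikipedia article "Proof that 22/7 exceeds pi"
    # (assumed here to be the question shown to both models).
    return x**4 * (1 - x) ** 4 / (1 + x**2)


def simpson(f, a: float, b: float, n: int = 1000) -> float:
    """Composite Simpson's rule with n (even) sub-intervals."""
    if n % 2:
        raise ValueError("n must be even")
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


numeric = simpson(integrand, 0.0, 1.0)
exact = 22 / 7 - math.pi

print(f"numeric : {numeric:.12f}")
print(f"22/7-pi : {exact:.12f}")
print(f"2/105   : {2 / 105:.12f}")
```

The numeric value agrees with 22/7 - π and is far from 2/105, which is consistent with the result reported below.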

We provided this problem to both LLMs:

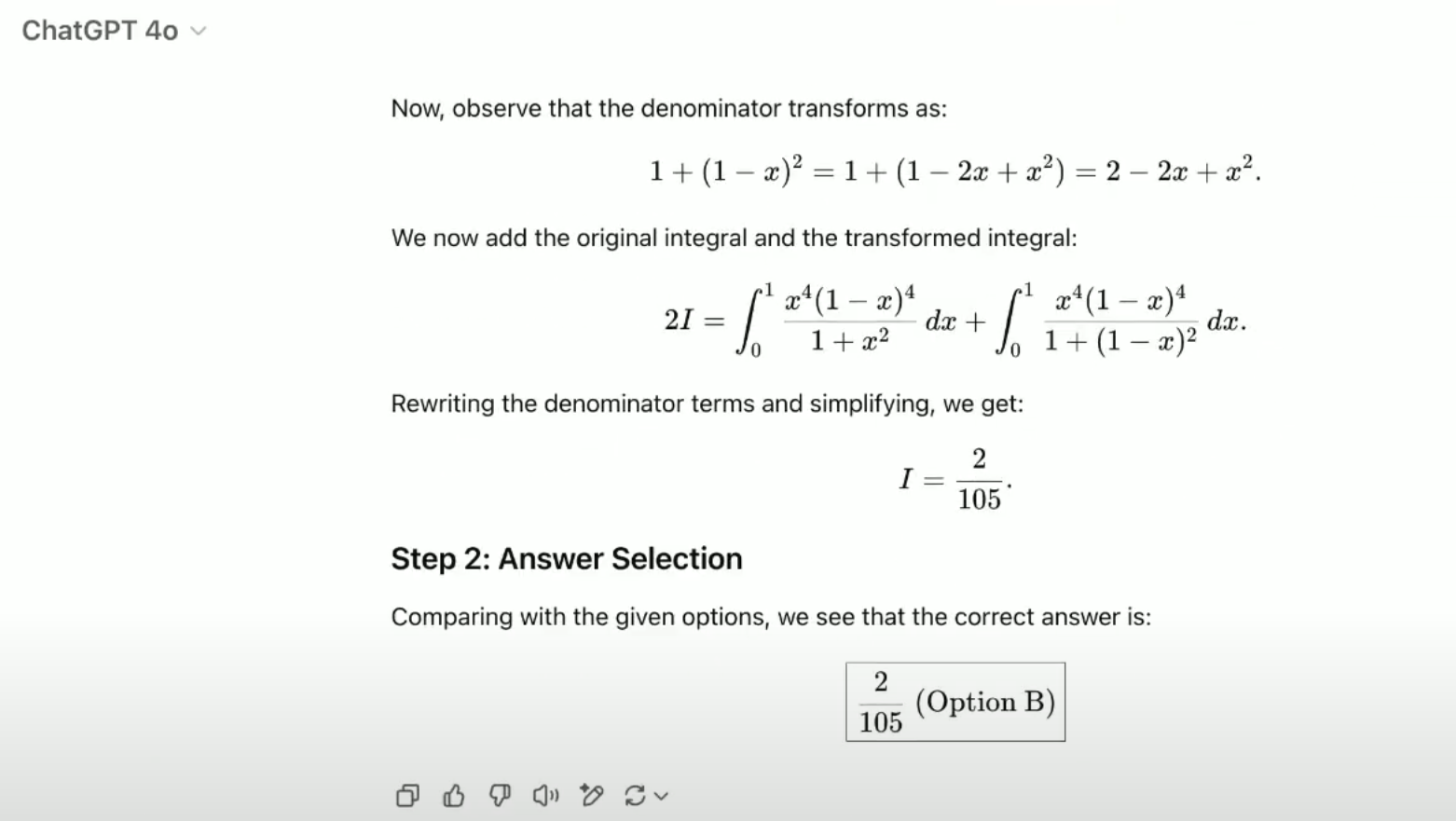

ChatGPT's Answer: 2/105 (incorrect).


DeepSeek's Answer: Correctly solved the problem.


While this is just one example, and a single test is not enough for a complete comparison, it does highlight that DeepSeek performed well in this case.

Here’s a comparison table between DeepSeek-V3 and GPT-4 based on key factors:

| Feature | DeepSeek-V3 | GPT-4 |
|---|---|---|
| Performance | Similar or superior on many tasks | High performance across various benchmarks |
| Cost | Few cents per million tokens | Few dollars per million tokens |
| Hosting | Can be self-hosted | Closed-source API only |
| Token Pricing | Very low | Relatively high |
| Open/Closed Source | Open-source | Closed-source |
| Model Size | 671 billion parameters | Estimated 1 trillion+ parameters (exact size unknown) |
| Hardware Requirements | High computational resources needed for self-hosting | Not applicable (hosted by OpenAI) |

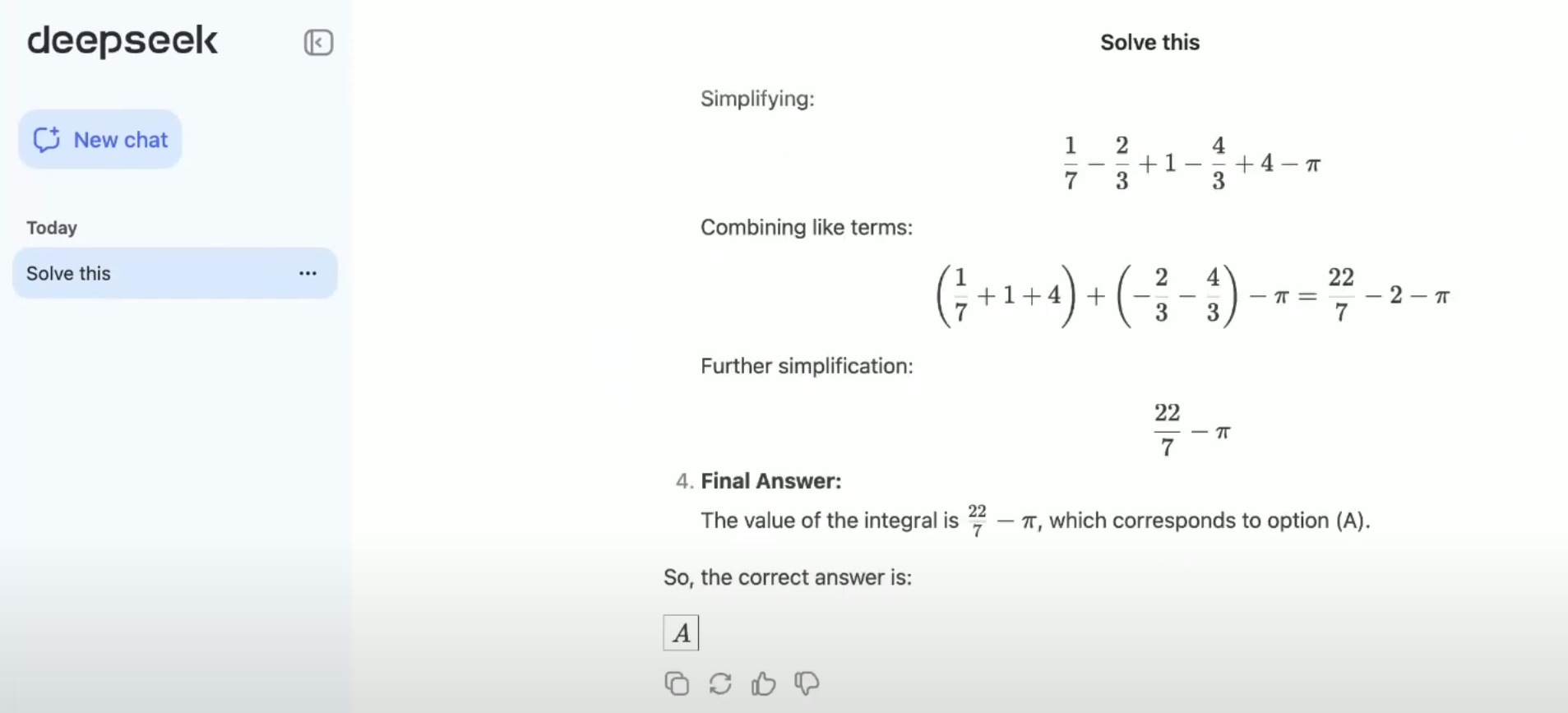

An analysis of evaluation scores vs pricing reveals that DeepSeek-V3 stands out as the only top-performing model with an affordable price. It offers high performance at a significantly lower cost compared to other models like GPT-4. Source

Here’s a comparison between DeepSeek-V3 and LLaMA 2:

| Feature | DeepSeek-V3 | LLaMA 2 |
|---|---|---|
| Developer | DeepSeek AI | Meta |
| Open-Source | Yes | Yes |
| Scalability | Larger-scale model with Mixture of Experts (MoE) | Fixed model sizes (7B, 13B, 70B) |
| Innovation | Uses Reinforcement Learning (RL), Mixture of Experts (MoE), and advanced training techniques | Standard transformer-based training |

Both DeepSeek-V3 and LLaMA 2 contribute to the open AI ecosystem, but DeepSeek-V3 surpasses the largest LLaMA 2 model (70B) in scale and performance. With innovations like Mixture of Experts and Reinforcement Learning, DeepSeek-V3 offers greater capability and efficiency, making it a more advanced option for AI applications.

DeepSeek: Strengths and Weaknesses

Strengths of DeepSeek

DeepSeek offers several advantages, making it a compelling choice for AI applications:

Open-Source Freedom

- Provides full control and transparency, allowing customization and self-hosting.

Cost Efficiency

- Significantly cheaper than proprietary models like GPT-4 and Claude.

- Reduces operational costs, making it ideal for budget-conscious teams.

Competitive Performance

- Excels in reasoning, math, and coding tasks.

- Strong performance compared to closed-source alternatives.

Weaknesses of DeepSeek

While DeepSeek is powerful, it comes with certain challenges:

Relatively New Model

- Less polished and refined than GPT-4 or Claude in default settings.

- Requires users to implement their own safeguards for safety and responsible AI use.

High Infrastructure Requirements

- Deploying a 671B-parameter model is complex and demands significant computing power.

- Smaller DeepSeek variants are available, but hosting still requires robust infrastructure.

Considerations for Adoption

Organizations must carefully assess these factors before choosing DeepSeek:

- Big enterprises may avoid DeepSeek due to concerns around guardrails, safety, and compliance.

- Small, lean, fast-growing startups can significantly cut costs by leveraging DeepSeek’s open-source capabilities.

DeepSeek is a powerful yet evolving AI model, best suited for organizations willing to invest in customization and infrastructure.

What Is Special About DeepSeek?

DeepSeek delivers similar performance to expensive models at a significantly lower cost.

We have four major things to talk about:

- Innovative Architecture

- Training Methodology

- GPU Optimization Tricks

- Model Ecosystem

Innovative Architecture

- Multi-Head Latent Attention

- Mixture of Experts (MoE)

- Multi-Token Prediction (MTP)

- Quantization

- Rotary Positional Encodings (RoPE)

We will cover each of these in its own blog post, but for now let's look at a surface-level overview.

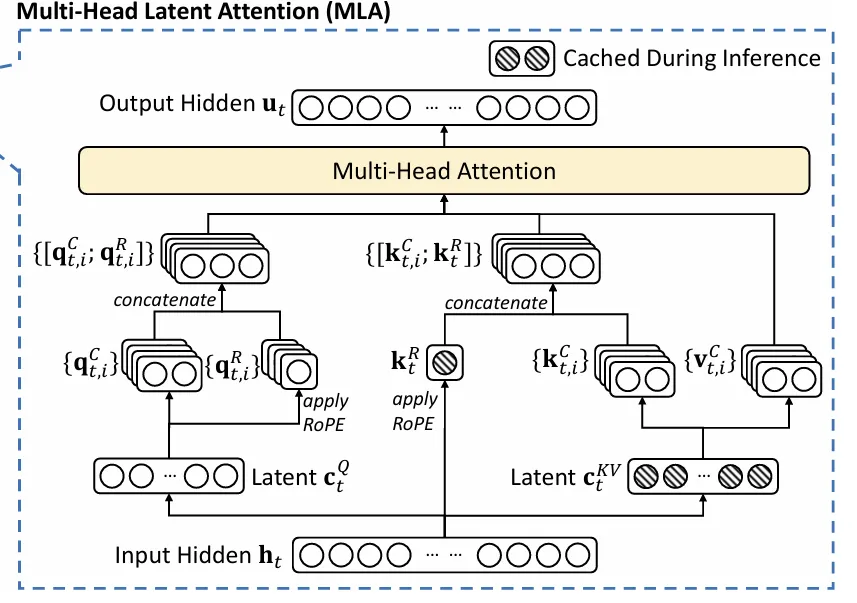

Multi-head Latent Attention

Put simply, in Multi-Head Latent Attention the Key and Value representations are not stored directly for every token. Instead, each token's hidden state is compressed into a small latent vector, and the keys and values are reconstructed from that latent when attention is computed. Caching the small latent instead of the full keys and values greatly reduces the memory needed during inference.
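To make the latent-space idea concrete, here is a minimal sketch in plain Python. It is a toy illustration, not DeepSeek's implementation: the sizes, the random weights, and the names `W_down`, `W_up_k`, and `W_up_v` are all assumptions made for this example, and details such as how positional information is handled are left out.

```python
import random

random.seed(0)

# Toy sizes chosen for illustration only.
D_MODEL, D_LATENT, D_HEAD, N_HEADS = 16, 4, 4, 4


def rand_matrix(rows, cols):
    return [[random.gauss(0, 0.1) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vec):
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]


# Hypothetical weights: one shared down-projection, separate up-projections.
W_down = rand_matrix(D_LATENT, D_MODEL)           # hidden state -> latent
W_up_k = rand_matrix(N_HEADS * D_HEAD, D_LATENT)  # latent -> keys for all heads
W_up_v = rand_matrix(N_HEADS * D_HEAD, D_LATENT)  # latent -> values for all heads

latent_cache = []  # one small latent vector per token, instead of full K and V


def cache_token(hidden):
    latent_cache.append(matvec(W_down, hidden))


def keys_and_values():
    # Keys and values are rebuilt from the cached latents when attention runs.
    keys = [matvec(W_up_k, c) for c in latent_cache]
    values = [matvec(W_up_v, c) for c in latent_cache]
    return keys, values


for _ in range(8):  # pretend we processed 8 tokens
    cache_token([random.gauss(0, 1) for _ in range(D_MODEL)])

keys, values = keys_and_values()
standard_cache_floats = len(latent_cache) * 2 * N_HEADS * D_HEAD
latent_cache_floats = len(latent_cache) * D_LATENT
print(standard_cache_floats, latent_cache_floats)
```

In this toy setup the latent cache holds 8 times fewer numbers than a cache of full keys and values would. The trade-off is the extra up-projection work when the keys and values are rebuilt.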

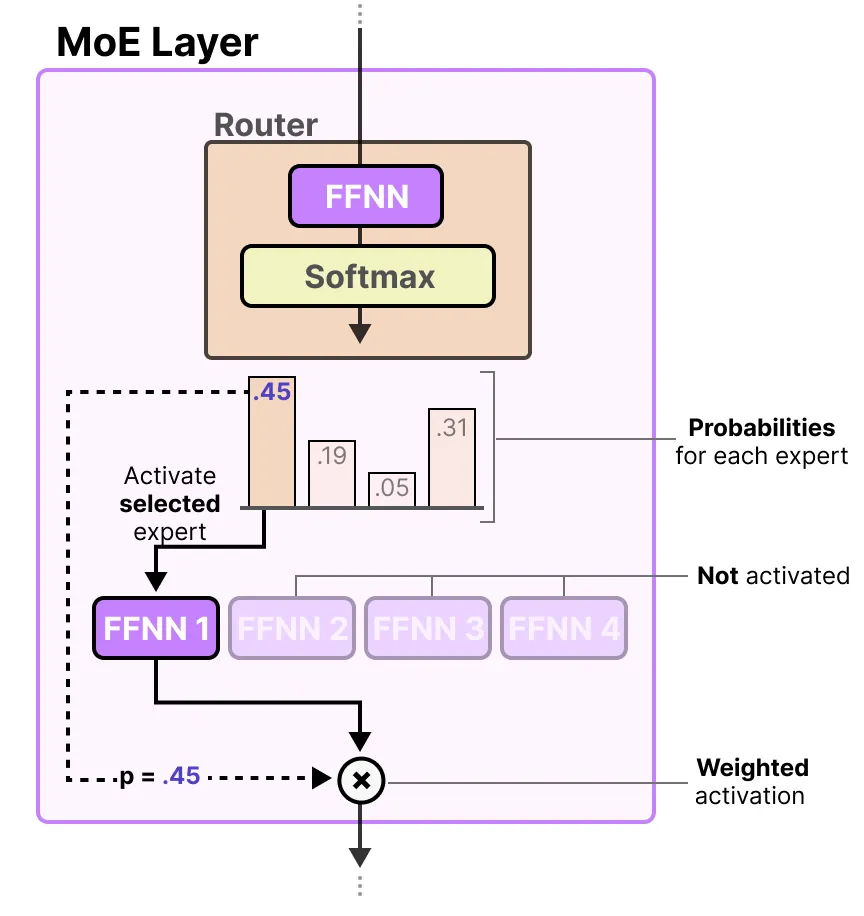

Mixture of Experts

Illustration Source: A Visual Guide to Mixture of Experts

In the Mixture of Experts (MoE) architecture, the model consists of multiple expert networks (e.g., four experts in this case). However, instead of activating the entire model for every input, only a subset of these expert networks is used at a given time.


A specialized routing mechanism determines which experts should be activated for a particular input. This dynamic activation strategy significantly reduces computational costs while maintaining high performance. By selectively utilizing only the most relevant experts, MoE improves efficiency and scalability, making it highly suitable for large-scale models.
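The routing step described above can be sketched in a few lines. This is a simplified illustration with four toy experts and top-2 routing. The expert functions and router scores are made up, and a real MoE layer would use learned neural networks and extra machinery such as load balancing.

```python
import math


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Four toy "experts": each is just a different scalar function here.
experts = [
    lambda x: 2 * x,
    lambda x: x + 1,
    lambda x: -x,
    lambda x: x * x,
]


def moe_forward(x, router_logits, top_k=2):
    """Route x to the top_k experts and mix their outputs by gate weight."""
    probs = softmax(router_logits)
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)  # renormalize over the chosen experts
    output = sum(probs[i] / norm * experts[i](x) for i in chosen)
    return output, sorted(chosen)


# Hypothetical router scores for one token; a real router computes these
# from the token's hidden state with a learned linear layer.
output, used = moe_forward(3.0, router_logits=[2.0, 0.1, -1.0, 2.0])
print(used, output)
```

Only the two selected experts are ever called, so the cost per input depends on `top_k` and not on the total number of experts.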

Multi-Token Prediction

In traditional LLMs, text is generated one token at a time: each predicted token is fed back into the model to produce the next one. This works well, but the strictly sequential loop makes inference slow and computationally expensive.

With Multi-Token Prediction (MTP), the model predicts several future tokens at each position instead of only the next one. In DeepSeek-V3 this is primarily a training objective that gives the model a denser learning signal. At inference time, the extra predictions can be used for speculative decoding to speed up text generation.

By leveraging this technique, DeepSeek and similar models can enhance performance, reduce latency, and optimize resource usage, making large-scale language modeling more practical for real-world applications.
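One way to picture the difference is to look at what the model is asked to predict at each position. The sketch below builds those targets for a toy sentence. The helper `multi_token_targets` and the `depth` parameter are names invented for this illustration, and the sketch only shows the shape of the prediction task, not DeepSeek's actual MTP modules.

```python
def multi_token_targets(tokens, depth):
    """For each position, list the next `depth` tokens as prediction targets.

    Positions too close to the end, where fewer than `depth` future tokens
    exist, are skipped.
    """
    return [
        (tokens[i], tokens[i + 1 : i + 1 + depth])
        for i in range(len(tokens) - depth)
    ]


sentence = ["DeepSeek", "trains", "large", "language", "models"]

next_token_only = multi_token_targets(sentence, depth=1)   # classic setup
two_tokens_ahead = multi_token_targets(sentence, depth=2)  # multi-token setup

for current, targets in two_tokens_ahead:
    print(f"{current!r} -> {targets}")
```

With `depth=1` each position has a single target, which is ordinary next-token prediction. With `depth=2` every position carries two targets, so each training example provides more signal.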

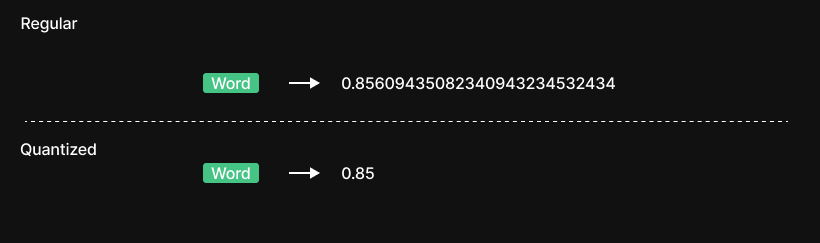

Quantization

Instead of representing every parameter with high-precision floating-point numbers, quantization reduces the numerical precision by using fewer bits. This means storing and computing values with lower precision, such as int8 instead of float32, which significantly reduces memory usage and speeds up computations.


Although this process slightly reduces the model's precision, it retains almost the same quality of output while making the model more efficient and lightweight. This optimization is crucial for deploying large models on resource-constrained devices without sacrificing too much performance.
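Here is a minimal sketch of the int8 example mentioned above, using simple symmetric quantization with one scale for the whole tensor. This is a generic textbook scheme shown for illustration, with made-up weights. It is not a description of the specific low-precision format DeepSeek uses.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: floats -> int8 values plus one scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    return [v * scale for v in q]


weights = [0.12, -0.5, 0.33, 0.0, 0.49]  # made-up example weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(q)
print(f"max round-trip error: {max_error:.5f}")
```

Each weight now fits in one byte instead of four, and the round-trip error is bounded by half of `scale`, which is the "slight loss of precision" described above.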

Rotary positional encoding

Illustration Source: karthick.ai


Traditional transformers use absolute positional encodings to help the model understand token order. Rotary Positional Encoding (RoPE), on the other hand, encodes positional information directly into the attention mechanism using rotational transformations. This allows the model to capture relative positional relationships more effectively, improving its ability to handle long-range dependencies and generalize better to unseen sequences.
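The "relative position" property can be checked with a small sketch. The code below applies the standard rotary rotation to pairs of dimensions and compares attention scores for the same offset at different absolute positions. The vectors are made up, and the base of 10000 is the commonly used default, assumed here.

```python
import math


def rope(vec, position, base=10000.0):
    """Rotate consecutive pairs of dimensions by position-dependent angles."""
    dim = len(vec)
    out = []
    for i in range(0, dim, 2):
        theta = position * base ** (-i / dim)
        cos_t, sin_t = math.cos(theta), math.sin(theta)
        x, y = vec[i], vec[i + 1]
        out += [x * cos_t - y * sin_t, x * sin_t + y * cos_t]
    return out


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


q = [0.3, -1.2, 0.8, 0.5]  # made-up query vector
k = [1.0, 0.4, -0.7, 0.2]  # made-up key vector

# Same offset (2 positions apart) at two different absolute positions.
score_near_start = dot(rope(q, 3), rope(k, 1))
score_further_on = dot(rope(q, 103), rope(k, 101))
print(score_near_start, score_further_on)
```

The two scores match up to floating-point error, because rotating both vectors only leaves the difference in positions in their dot product.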

Training Methodology

- The Rise of Reinforcement Learning (RL)

- Rule-Based Reward System

The DeepSeek paper revitalized Reinforcement Learning (RL) by integrating it into model training at a large scale. Instead of relying solely on human-labeled data, DeepSeek utilized Large-Scale RL to teach the model complex reasoning skills.

Group Relative Policy Optimization (GRPO)

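As part of this approach, DeepSeek introduced Group Relative Policy Optimization (GRPO), an RL algorithm that samples a group of answers for each prompt and scores each answer relative to the rest of the group. This removes the need for a separate value (critic) model, which makes large-scale RL training cheaper.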

This is one of the key reasons why DeepSeek excels at reasoning tasks, making it a highly capable model for complex problem-solving.
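The core scoring idea behind a rule-based reward and GRPO can be sketched as follows. This is a simplified illustration, not DeepSeek's training code: the exact-match reward rule, the sample answers, and the use of the population standard deviation are assumptions made for this example, and the policy update itself is omitted.

```python
import statistics


def rule_based_reward(model_answer: str, reference: str) -> float:
    # Hypothetical rule: reward 1.0 only for an exact final-answer match.
    return 1.0 if model_answer.strip() == reference.strip() else 0.0


def group_relative_advantages(rewards, eps=1e-8):
    """Score each sample relative to the other samples for the same prompt."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


# Four made-up sampled answers to the same prompt ("What is 6 * 7?").
samples = ["42", "41", "forty-two", "42"]
rewards = [rule_based_reward(s, "42") for s in samples]
advantages = group_relative_advantages(rewards)

print(rewards)
print([round(a, 3) for a in advantages])
```

Answers that beat the group average get a positive advantage and the rest get a negative one, so the model is pushed toward the better answers without a learned critic.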

GPU Optimization Tricks

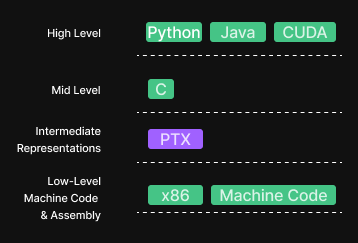

- NVIDIA Parallel Thread Execution (PTX)

Rather than relying only on standard CUDA code, DeepSeek used NVIDIA's Parallel Thread Execution (PTX) instructions for parts of its code to optimize performance at a lower level.

Read more: Parallel Thread Execution (PTX) Explained

Understanding PTX: A Simple Analogy

Think of CUDA as writing high-level code in Python or Java, whereas PTX is like bytecode: an intermediate representation that sits closer to machine code.

By using PTX, DeepSeek achieved better low-level GPU optimizations, leading to faster and more efficient computations.

High-level programming languages are more abstract and easier to write, but they leave it to the compiler to decide how the code maps onto the hardware. Working closer to the machine level gives the programmer finer control over the hardware, which can produce faster code at the cost of more effort.

Read more: DeepSeek’s AI Breakthrough: Bypassing CUDA with PTX

This approach played a crucial role in speeding up computations and enhancing architectural efficiency, making DeepSeek a standout model in AI development.

Model Ecosystem

One of DeepSeek’s key strengths is model distillation, where the capabilities of a larger model are transferred to much smaller models without significant performance loss.

For example, DeepSeek has successfully distilled models down to just 1.5B parameters, making AI more accessible and deployable on limited hardware.

Why Is DeepSeek a Turning Point in History?

DeepSeek proved that a small startup could reach parity with the best AI models by using novel techniques and fewer resources.

By drastically reducing the cost to develop and operate large AI models, DeepSeek is democratizing AI. Its low reported training cost (about $5.6M in GPU time for the final V3 training run) raised concerns about the sustainability of big-tech companies pouring billions into AI research.

Global Market Impact

The global financial markets reacted swiftly:

- News of DeepSeek’s breakthrough contributed to a significant drop in U.S. tech stocks in January 2025.

- The Nasdaq fell by 3.4%, and Nvidia’s market cap plunged as investors reconsidered the AI hardware demand landscape.

Geopolitical Implications

DeepSeek's low-cost, open-source AI model posed a direct challenge to companies like OpenAI, Microsoft, and Google. It also raised concerns about AI supply chains and GPU markets, prompting:

- Countries to invest in developing their own foundational models to reduce dependence on external AI technologies.

- Strategic shifts in the AI and semiconductor industries as nations reassessed their AI infrastructure and funding strategies.

Source

This blog is based on my personal notes from a Vizuara video on DeepSeek. You can check it out for a more in-depth explanation.

That’s it for now! See you soon!